NVIDIA’s GH200 NVL2: What You Need to Know

A blog by Allan Kaye, Co-Founder and Managing Director at Vespertec

Release date: 17 February 2025

—

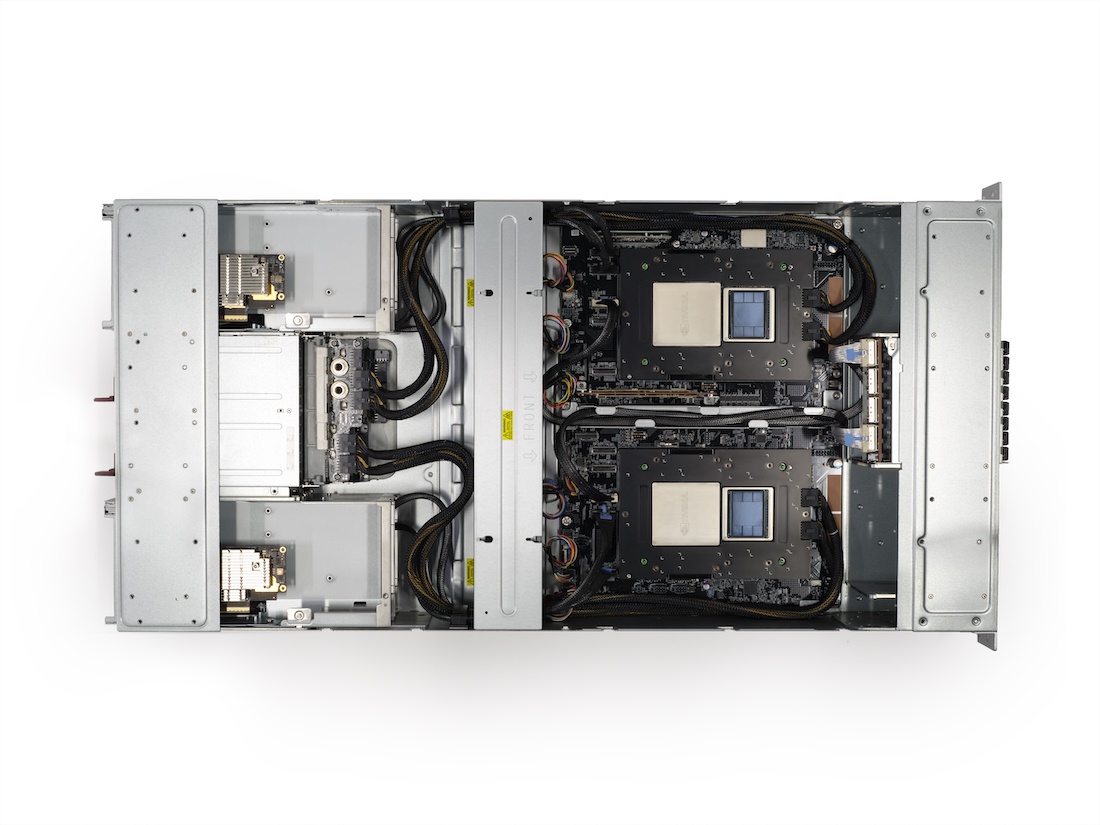

NVIDIA’s new GH200 NVL2 platform represents a sea change in how organisations can approach large-scale AI computation. With systems available as of January 2025, this dual Grace Hopper Superchip system packed into a 2U form factor delivers capabilities that were previously available only in much larger deployments. The platform’s combination of enhanced memory capacity and improved processing efficiency opens new possibilities for AI research and enterprise applications.

Architectural Innovation

The NVL2 platform is built on a sophisticated dual-GPU architecture that pushes the boundaries of high-performance computing. Each 2U node houses two NVLinked Grace Hopper Superchips, creating a powerhouse system with exceptional memory capacity and bandwidth for its size. The platform offers 288GB of high-bandwidth memory, 10 TB/s of memory bandwidth, and 1.2TB of fast memory in total.

One of the most notable improvements is the inclusion of 144GB HBM3e GPUs, a significant upgrade from the 96GB HBM3 GPUs available at the initial launch. This enhancement not only increases memory capacity but also delivers superior bandwidth, enabling more complex workloads and larger model operations.

Performance Parameters and Capabilities

The NVL2’s theoretical capabilities are particularly impressive when considering different precision scenarios:

- At full FP16 precision, the system can handle models with 70-80 billion parameters, making it suitable for workloads like Llama 2 (70B).

- With 8-bit quantisation, the system could extend its reach to approximately 140-150 billion parameters.

- Implementing 4-bit quantisation might push the boundaries further, potentially accommodating models in the 280-300 billion parameter range.
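To make the arithmetic behind these ranges concrete, here is a minimal sketch that estimates the memory needed for model weights alone (parameters multiplied by bytes per parameter) and compares it with the 288GB of HBM3e quoted above. The function names and the three example model sizes are illustrative assumptions, and the estimate deliberately ignores KV cache, activations, and framework overhead, which is why the practical ranges above sit well below the raw capacity.

```python
# Rough weight-memory estimate: parameters x bytes per parameter.
# Assumption: 1 GB = 1e9 bytes, so "billions of parameters" x "bytes per
# parameter" gives GB directly. Weights only; no KV cache or activations.

HBM_CAPACITY_GB = 288  # combined HBM3e across both GPUs, as quoted in this post

BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_footprint_gb(params_billion: float, precision: str) -> float:
    """Memory needed for the model weights alone, in GB."""
    return params_billion * BYTES_PER_PARAM[precision]


def headroom_gb(params_billion: float, precision: str,
                capacity_gb: float = HBM_CAPACITY_GB) -> float:
    """HBM left over for KV cache, activations, and runtime overhead."""
    return capacity_gb - weight_footprint_gb(params_billion, precision)


# Hypothetical model sizes taken from the ranges in the list above.
for params, precision in [(70, "fp16"), (150, "int8"), (300, "int4")]:
    used = weight_footprint_gb(params, precision)
    free = headroom_gb(params, precision)
    print(f"{params}B @ {precision}: weights {used:.0f} GB, headroom {free:.0f} GB")
```

In each case the weights occupy roughly half of the available HBM, which leaves the remainder for context-dependent state. How much of that headroom a real workload needs depends on batch size, sequence length, and the serving or training framework.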

Technical Architecture and Performance Optimisation

The system’s dual-GPU configuration, enhanced by the high-speed NVLink interconnect, operates with remarkable cohesion, effectively performing as a single GPU with 288GB of memory for many applications. This architectural approach offers several key advantages:

- The NVLink interconnect’s superior bandwidth compared to PCIe significantly reduces communication overhead, resulting in faster training cycles and optimal resource utilisation.

- Larger context windows enable better handling of long-sequence tasks, making the system particularly valuable for advanced AI research and complex data processing pipelines.

- The architecture’s design seamlessly accommodates both pipeline and tensor parallelism, enhancing the efficiency of large-scale model execution.
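As an illustration of the tensor parallelism mentioned above, the sketch below splits a weight matrix column-wise into two shards, computes each shard's partial output separately (standing in for the two GPUs), and concatenates the results. It uses plain Python lists rather than a GPU framework, and every name in it is hypothetical; it shows the idea, not how any particular library implements it.

```python
# Column-wise tensor parallelism for a single linear layer, in plain Python.
# Each "device" holds one column shard of the weight matrix and computes its
# slice of the output; the slices are then concatenated (an all-gather).


def matmul(a, b):
    """Plain matrix multiply: (m x k) @ (k x n) -> (m x n)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]


def split_columns(w, parts):
    """Split weight matrix w into `parts` equal column shards."""
    n_cols = len(w[0])
    assert n_cols % parts == 0, "columns must divide evenly across devices"
    step = n_cols // parts
    return [[row[i * step:(i + 1) * step] for row in w] for i in range(parts)]


def tensor_parallel_matmul(x, w, parts=2):
    """Compute x @ w by giving each device one column shard of w."""
    shards = split_columns(w, parts)
    partials = [matmul(x, shard) for shard in shards]  # one result per device
    # Concatenate each device's output columns, row by row.
    return [sum((p[r] for p in partials), []) for r in range(len(x))]


x = [[1, 2], [3, 4]]
w = [[1, 0, 2, 1], [0, 1, 1, 3]]
print(tensor_parallel_matmul(x, w))  # same values as matmul(x, w)
```

The concatenation step is where the interconnect matters: on real hardware the partial outputs have to move between GPUs, so the bandwidth of the link between them directly affects how much time is spent communicating rather than computing.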

Deployment, Applications and Use Cases

By pushing the boundaries of memory capacity and processing efficiency in a compact form factor, this platform creates opportunities that were previously constrained by hardware limitations.

So, what can you use it for? Looking at the architecture, we’ve put together a few hypothetical use cases for which the system’s memory, bandwidth, and configuration could potentially make it a great fit.

Research and Development

The first use case to bear in mind is model training. The NVIDIA GH200 NVL2 system enhances neural network training efficiency through its dual-superchip design: NVLink-C2C provides 900GB/s of memory-coherent bandwidth between each Grace CPU and its Hopper GPU, alongside 288GB of high-bandwidth memory (HBM3e). This setup reduces traditional memory bottlenecks and accelerates model parameter exchanges, improving performance in large-scale AI training.

Next is training optimisation. Hyperparameter tuning traditionally requires extensive computational resources and time. The NVL2’s architecture, with its high-bandwidth memory and 10 TB/s of memory bandwidth, helps keep data-movement overhead low, allowing you to explore larger hyperparameter spaces more efficiently.

Finally, the GH200 NVL2 has some exciting implications for multi-modal AI training and inference. The platform’s 1.2TB of fast memory provides the necessary infrastructure to develop, train, and operate complex multi-modal models, supporting simultaneous processing of high-resolution image data, extensive text corpora, and intricate audio signals.

Enterprise AI Applications

The GH200 NVL2’s ability to efficiently serve large language models, such as Llama 2 (70B), stems directly from its memory architecture. With 288GB of high-bandwidth memory, enterprises can run sophisticated language models on a single 2U node, reducing infrastructure complexity and operational costs.
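One way to see why memory bandwidth matters for serving is a common back-of-envelope rule: when generating one token at a time, each step has to read roughly the full set of weights from memory, so bandwidth divided by weight size gives a rough ceiling on single-sequence decode speed. The sketch below applies that rule to the 10 TB/s figure quoted for the platform. It is an assumption-laden upper bound, not a benchmark: it ignores compute, KV cache reads, inter-GPU communication, and batching, and the function name is illustrative.

```python
# Back-of-envelope ceiling on single-sequence decode speed for a
# memory-bandwidth-bound model: bandwidth / bytes of weights read per token.
# Assumption: every generated token reads all weights once (batch size 1).

MEMORY_BANDWIDTH_GB_S = 10_000  # 10 TB/s, the platform figure quoted in this post


def decode_tokens_per_second_ceiling(params_billion: float,
                                     bytes_per_param: float,
                                     bandwidth_gb_s: float = MEMORY_BANDWIDTH_GB_S) -> float:
    """Theoretical upper bound on tokens/s for one sequence; real results are lower."""
    weight_gb = params_billion * bytes_per_param
    return bandwidth_gb_s / weight_gb


# A 70B-parameter model at FP16 (2 bytes) and at 8-bit (1 byte) precision.
for label, bytes_per_param in [("fp16", 2.0), ("int8", 1.0)]:
    ceiling = decode_tokens_per_second_ceiling(70, bytes_per_param)
    print(f"70B @ {label}: at most ~{ceiling:.1f} tokens/s per sequence")
```

Batching several requests together lets one pass over the weights serve many sequences, which is how real deployments reach much higher aggregate throughput than this per-sequence ceiling suggests.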

It’s also a powerful enabler for real-time analytics. High-velocity data processing requires both memory bandwidth and computational efficiency. The same 10 TB/s of memory bandwidth that makes the system well suited to training optimisation also allows it to process complex streaming data models with minimal latency.

Next, let’s think about computer vision at enterprise scale. Advanced object detection and tracking need continuous processing of high-resolution video streams. The platform’s dual-GPU configuration with 288GB memory can simultaneously analyse multiple 4K or 8K video streams, supporting security, quality control, and autonomous systems monitoring applications.
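For a sense of scale, the sketch below estimates how much memory decoded video frames occupy. It assumes uncompressed 8-bit RGB frames (3 bytes per pixel) at 30 frames per second, which are illustrative assumptions rather than figures from any specific pipeline; real systems may use other pixel formats, frame rates, or keep frames compressed for longer.

```python
# Rough memory cost of decoded video frames.
# Assumptions: uncompressed 8-bit RGB (3 bytes per pixel), 30 frames per second.

BYTES_PER_PIXEL = 3
FPS = 30

RESOLUTIONS = {"4K": (3840, 2160), "8K": (7680, 4320)}


def frame_bytes(width: int, height: int) -> int:
    """Size of one decoded frame in bytes."""
    return width * height * BYTES_PER_PIXEL


def stream_gb_per_second(width: int, height: int, fps: int = FPS) -> float:
    """Decoded data produced by one stream each second, in GB (1e9 bytes)."""
    return frame_bytes(width, height) * fps / 1e9


for name, (w_px, h_px) in RESOLUTIONS.items():
    mb = frame_bytes(w_px, h_px) / 1e6
    rate = stream_gb_per_second(w_px, h_px)
    print(f"{name}: {mb:.1f} MB per frame, {rate:.2f} GB/s per stream")
```

Under these assumptions, a second of decoded 8K video is about 3GB, so buffering several streams alongside the detection models is a small fraction of 288GB. In practice, the number of streams a node can analyse is more likely to be limited by model compute and video decode throughput than by frame storage.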

Scientific Computing

The platform’s high-bandwidth memory and parallel processing capabilities could also enable unprecedented detail in molecular dynamics simulations. Researchers can model complex molecular interactions, accelerating drug discovery and materials science research through more comprehensive computational approaches.

In climate research, simulations require processing enormous, high-resolution datasets with complex neural network models. The GH200 NVL2’s combination of fast memory and memory bandwidth could allow simultaneous global and local climate pattern analysis, supporting more nuanced predictive models that capture intricate environmental interactions.

On the genomics front, the NVL2’s architecture could support rapid sequence alignment, variant calling, and machine learning model training for protein folding predictions, dramatically accelerating genetic research timelines.

These advancements are made possible not just through raw computing power, but by the platform’s ability to slot into existing infrastructure while delivering significantly enhanced capabilities. Organisations can focus on innovation and application development rather than infrastructure overhaul, accelerating their path to AI-driven insights and solutions.

Preparing for Tomorrow’s AI Workloads

The GH200 NVL2 couldn’t have been made available at a better time for AI and HPC. As model sizes continue to grow and we shift towards an inference-first approach to data centre configurations, the platform’s enhanced memory capacity and processing capabilities provide the foundation needed for next-generation AI workloads.

This system bridges the gap between infrastructure practicality and ambition, enabling organisations to deploy increasingly sophisticated AI solutions without the traditional trade-offs between capability and manageability.

If you’d like to know more about the Grace Hopper architecture, my colleague Ben explored three use cases for the Grace Hopper Superchip in his blog, which you can find here.

For organisations interested in early access to the Grace Hopper Superchip architecture, we’re running a Test Drive programme to allow you to experience this platform and others like it. If you would like to find out more or be one of the first to try out this new system, please get in touch.