DGX Spark Explained: A Researcher’s Best Friend

Release date: 28 May 2025

A blog by Allan Kaye, CEO and co-founder at Vespertec

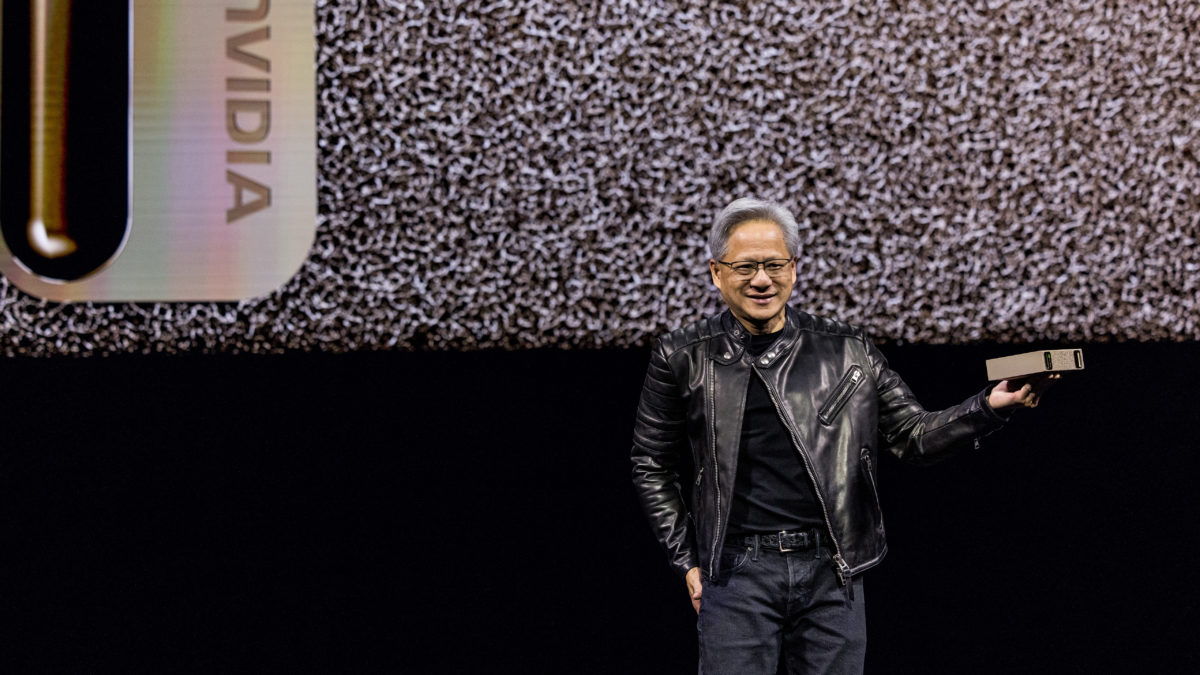

One hour and forty-seven minutes into his GTC 2025 keynote speech, Jensen Huang held up a small box the size of a Wi-Fi router. He likened it to the original DGX-1 “with Pym particles.” That box was the DGX Spark.

While NVIDIA didn’t task Ant-Man with remaking its classic deep learning system, Huang wasn’t entirely joking. DGX-1 weighed about 60kg; DGX Spark weighs just over 1.2kg, making it roughly 50 times lighter. In fact, in its original incarnation as Project DIGITS, it was billed as the “World’s Smallest AI Supercomputer.” It contains a GB10 Superchip system-on-a-chip (SoC), a miniature version of the newest Grace Blackwell architecture.

The biggest selling point of DGX Spark is that it fits on your desk. Because most other superchip-powered systems come only in much larger form factors, that’s a major advantage. At last year’s conference, NVIDIA announced the DGX GB200 NVL72 system, capping off a trend of increasingly powerful servers crammed full of compute nodes. It weighs just over 1.36 metric tons, which would do serious damage to your kitchen table.

If you find it hard to differentiate between HGX, DGX, and NVIDIA’s other product categories, we’ve created a handy explainer to decode each reference architecture.

On a more serious note, the DGX Spark’s most obvious and exciting use is for researchers looking to investigate the superchip architecture at a much lower price. With a palm-sized AI supercomputer, researchers at universities and other institutions can run pilot programmes and tests that—until now—just weren’t possible.

NVIDIA CEO Jensen Huang Presents DGX Spark. Credit: NVIDIA

Why Researchers Need Superchips

A researcher with access to high-performance compute spends a disproportionate share of it on two things: running large-scale simulations and training bespoke ML models.

Take Bristol’s Isambard 3 supercomputer as an example, which is, incidentally, powered by the Arm-based Grace CPU Superchip. Isambard 3 was initially hosted by the Met Office, which used it to build better weather forecasting and climate prediction models. Later, it was used to run molecular-level simulations of Parkinson’s disease and to help develop drugs to treat osteoporosis. That kind of large-scale simulation and model training demands high floating-point throughput, fast memory access, and low-latency, high-throughput networking. That’s where NVIDIA’s superchips thrive.

An Overview of Superchips

Superchips are one of NVIDIA’s most fascinating inventions: they combine multiple CPUs and/or GPUs on the same board to achieve the best results for a given use case. Examples include (but are not limited to):

- Grace Hopper Superchip (GH200) – 1x Grace CPU + 1x Hopper H200 GPU; released August 2023.

- Grace CPU Superchip (Grace-Grace) – 2x Grace CPUs via NVLink-C2C; announced March 2022.

- GB200 NVL72 Superchip System – 36x Grace CPUs + 72x Blackwell GPUs; announced March 2024.

- Vera Rubin Superchip – next-gen Grace Blackwell successor; announced March 2025.

If you’re interested in the Grace Hopper architecture, find out how the GH200 NVL2 system is perfectly suited for scientific computing and genomics workloads.

With the Grace Hopper and Grace Blackwell architectures, researchers get the best of both worlds. The combined strengths of GPUs and CPUs accelerate workloads, while NVIDIA’s NVLink-C2C connection cuts down communication time between chips.

According to NVIDIA, the GH200 offers up to 3.5x more GPU memory capacity and 3x more bandwidth than the H100, which matters for compute- and memory-intensive workloads. Meanwhile, its memory-coherent, high-bandwidth, low-latency NVLink-C2C interconnect offers up to 900 GB/s of total bandwidth. That’s about 7x more than the PCIe Gen5 x16 links typically used to attach accelerators in HPC systems.
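The "7x" figure is easy to check from first principles. The sketch below derives PCIe Gen5 x16 bandwidth from the PCIe signalling rate (32 GT/s per lane) and its 128b/130b line encoding, then compares it with the 900 GB/s NVLink-C2C figure quoted above. It assumes 900 GB/s is the combined bandwidth for both directions and ignores packet and protocol overhead, so treat it as back-of-envelope arithmetic rather than a measured result.

```python
# PCIe Gen5 signalling rate per lane (transfers/s, 1 bit per transfer per lane)
GEN5_GT_PER_LANE = 32e9
# PCIe Gen5 uses 128b/130b line encoding
ENCODING = 128 / 130
LANES = 16
# NVIDIA's quoted NVLink-C2C figure; assumed here to be both directions combined
NVLINK_C2C_TOTAL_GBS = 900.0


def pcie5_x16_total_gbs(account_for_encoding: bool = True) -> float:
    """Bidirectional PCIe Gen5 x16 bandwidth in GB/s (ignores packet/protocol overhead)."""
    efficiency = ENCODING if account_for_encoding else 1.0
    per_direction_bytes = GEN5_GT_PER_LANE * efficiency * LANES / 8  # bits -> bytes
    return 2 * per_direction_bytes / 1e9  # both directions, in decimal GB/s


if __name__ == "__main__":
    for label, encoded in (("raw", False), ("after 128b/130b", True)):
        pcie = pcie5_x16_total_gbs(encoded)
        ratio = NVLINK_C2C_TOTAL_GBS / pcie
        print(f"PCIe Gen5 x16 ({label}): {pcie:.1f} GB/s -> NVLink-C2C is {ratio:.1f}x faster")
```

Counting the raw rate gives 128 GB/s and a ratio of about 7.0x; allowing for encoding overhead nudges it to roughly 7.1x. Either way, the headline claim holds.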

I can’t overstate the role networking plays here. By tying NVIDIA’s best GPUs and CPUs together over a fast, coherent link and cutting the overhead of communication between them, you get all the resources your workload needs in one place.

DGX Spark (left) and DGX Station (right). Credit: NVIDIA

Making Superchips Accessible To Researchers

Superchips are excellent at what they do. But that comes at a cost. The superchip architecture is perfect for running climate simulations or fine-tuning ML models for a particular use case, but investments of that size often require a lot of organisational buy-in. Also, when it comes to purchasing equipment for the lab, the grant process throws up another hurdle.

When proposing necessary equipment for research grants, UKRI advises that individual items between £10,000 and £400,000 can be included if “the equipment is [both] essential to the proposed research [and] no appropriate alternative provision can be accessed.” Meeting that bar is much easier with results already in hand. Priced well below that £10,000 threshold, DGX Spark gives researchers a low-cost way to demonstrate the value of superchip architecture upfront, strengthening the case for larger investments later.

What surprises me, though, is the cost. For what it can run, a DGX Spark priced under £3,495 is remarkable, especially compared to a GPU workstation with far less AI horsepower. This seems like a tactical move by NVIDIA to seed the market and accelerate agentic AI adoption. It’s a smart play that could quickly drive uptake among research teams, institutions, and innovators experimenting with AI at the edge.

DGX Spark: the personal AI supercomputer. Credit: NVIDIA

What Makes DGX Spark Suitable for Researchers?

Now, beyond accessibility, let’s see what makes the DGX Spark a great fit for researchers. Small as it may be, it packs a big punch. Despite displacing less water than a large water bottle, it offers 1 petaFLOP of AI compute at FP4 precision, with 128GB of LPDDR5X memory and 20 Arm cores.

NVIDIA chose this configuration specifically for prototyping and fine-tuning AI models, running inference, developing edge applications, and speeding up end-to-end data science workflows: all activities well within a researcher’s scope.
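To get a feel for what 128GB of unified memory means for inference, a common rule of thumb is that model weights occupy roughly (parameter count x bytes per parameter). The sketch below applies that rule. The 16GB reserved for the OS, runtime and KV cache is a hypothetical allowance, not an NVIDIA figure, and the estimate covers weights only.

```python
# Approximate bytes of storage per parameter for common inference precisions.
BYTES_PER_PARAM = {"fp16": 2.0, "fp8": 1.0, "fp4": 0.5}

SPARK_MEMORY_GB = 128.0  # unified LPDDR5X, shared by CPU and GPU
RESERVED_GB = 16.0       # hypothetical allowance for OS, runtime and KV cache


def weights_gb(params_billion: float, precision: str) -> float:
    """Weight footprint in decimal GB: (params_billion * 1e9) * bytes / 1e9."""
    return params_billion * BYTES_PER_PARAM[precision]


def fits_in_memory(params_billion: float, precision: str,
                   memory_gb: float = SPARK_MEMORY_GB,
                   reserved_gb: float = RESERVED_GB) -> bool:
    """True if the weights alone fit in the memory left after the reserve."""
    return weights_gb(params_billion, precision) <= memory_gb - reserved_gb


if __name__ == "__main__":
    for params, prec in [(70, "fp16"), (70, "fp8"), (200, "fp4")]:
        need = weights_gb(params, prec)
        verdict = "fits" if fits_in_memory(params, prec) else "does not fit"
        print(f"{params}B @ {prec}: {need:.0f} GB of weights -> {verdict}")
```

Lower precision is what makes larger models practical on a desktop box. Fine-tuning needs considerably more memory than this (gradients and optimiser state), unless you use parameter-efficient methods such as LoRA.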

You can find the full specs on the NVIDIA website (and below):

- Architecture: NVIDIA GB10 Grace Blackwell superchip

- CPU: 20-core Arm (10x Cortex-X925 + 10x Cortex-A725)

- Performance: up to 1,000 AI TOPS at FP4

- Memory: 128GB of coherent, unified system memory (LPDDR5X)

- NIC: 200GbE ConnectX-7 Smart NIC

- Storage: up to 4TB

- Dimensions: 150mm L x 150mm W x 50.5mm H

- Power draw: 170W

- Mass: just over 1.2kg
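A few headline ratios fall straight out of the spec list. The sketch below computes the box volume (backing up the water-bottle comparison), a back-of-envelope TOPS-per-watt figure, and the mass ratio against the roughly 60kg DGX-1. It assumes the 1,000 TOPS figure is a peak number and that 170W is the relevant power figure, so the efficiency ratio is illustrative, not a measured sustained value.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Spec:
    length_mm: float
    width_mm: float
    height_mm: float
    fp4_tops: float  # headline peak FP4 figure from the spec sheet
    power_w: float   # power figure from the spec list
    mass_kg: float


DGX_SPARK = Spec(length_mm=150, width_mm=150, height_mm=50.5,
                 fp4_tops=1000, power_w=170, mass_kg=1.2)
DGX1_MASS_KG = 60.0  # approximate mass of the original DGX-1


def volume_litres(s: Spec) -> float:
    """Box volume; 1 litre = 1,000,000 mm^3."""
    return s.length_mm * s.width_mm * s.height_mm / 1_000_000


def tops_per_watt(s: Spec) -> float:
    """Peak FP4 TOPS divided by the listed power figure (not a measured efficiency)."""
    return s.fp4_tops / s.power_w


if __name__ == "__main__":
    print(f"Volume: {volume_litres(DGX_SPARK):.2f} L")
    print(f"Peak FP4 per watt: {tops_per_watt(DGX_SPARK):.1f} TOPS/W")
    print(f"DGX-1 is about {DGX1_MASS_KG / DGX_SPARK.mass_kg:.0f}x heavier")
```

At about 1.14 litres, the whole system takes up less space than a 1.5-litre bottle of water.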

Software-ready Out of the Box

DGX Spark comes fully loaded with everything AI developers need to get started right away. It runs NVIDIA DGX OS, the same operating system used across the DGX family. At its core is Ubuntu, enhanced with the NVIDIA kernel, RTX GPU desktop drivers (for rendering and desktop workloads), NVIDIA DOCA for networking, and NVIDIA Docker for container support.

On top of that, it includes the CUDA toolkit, libraries, and the building blocks needed to support higher-level SDKs and frameworks available through NGC (NVIDIA GPU Cloud). Developers can join NGC and the NVIDIA Developer Program for free to access SDKs, documentation, and updates, keeping them aligned with the latest DGX Spark capabilities.
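As a first sanity check on a new unit, you might confirm that the expected tools are on the PATH and then launch a framework container from NGC. The sketch below does both using only the standard library. Several things in it are assumptions. The tool list is illustrative, and `nvcc` may live under `/usr/local/cuda/bin` without being on the PATH by default. The `pytorch` image and the `25.04-py3` tag are example values to check against the NGC catalogue. The `--gpus all` flag relies on the NVIDIA container runtime that ships with the Docker setup described above.

```python
from __future__ import annotations

import shlex
import shutil
import subprocess

# Illustrative list of tools one might expect on a DGX OS install (assumption).
EXPECTED_TOOLS = ("nvidia-smi", "nvcc", "docker")
NGC_REGISTRY = "nvcr.io/nvidia"


def check_stack(tools: tuple[str, ...] = EXPECTED_TOOLS) -> dict[str, bool]:
    """Report whether each command-line tool is found on PATH."""
    return {tool: shutil.which(tool) is not None for tool in tools}


def gpu_summary() -> str | None:
    """Return 'name, driver_version' from nvidia-smi, or None if it is unavailable."""
    if shutil.which("nvidia-smi") is None:
        return None
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,driver_version", "--format=csv,noheader"],
        capture_output=True, text=True, check=False,
    )
    return result.stdout.strip() or None


def ngc_run_command(image: str, tag: str) -> list[str]:
    """Build a `docker run` command for an NGC container with access to all GPUs."""
    return ["docker", "run", "--gpus", "all", "--rm", "-it",
            f"{NGC_REGISTRY}/{image}:{tag}"]


if __name__ == "__main__":
    for tool, found in check_stack().items():
        print(f"{tool:12} {'found' if found else 'missing'}")
    print("GPU:", gpu_summary() or "not detected")
    # Hypothetical example tag: check the NGC catalogue for the current release.
    print(shlex.join(ngc_run_command("pytorch", "25.04-py3")))
```

The script only prints the `docker run` command rather than executing it, so you can review the image and tag before pulling a multi-gigabyte container.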

Clustering Out

DGX Spark doesn’t have to be just a stepping stone. ServeTheHome made an intriguing discovery in its piece on the personal AI supercomputer:

“Speaking of two, NVIDIA is selling and supporting these not just as single AI mini computers. Instead, having two in a cluster will be a sold and supported configuration.

“We asked about the ability to connect more than two. NVIDIA said that initially they were focused on bringing 2x GB10 cluster configurations out using the 200GbE RDMA networking. There is also nothing really stopping folks from scaling out other than that is not an initially supported NVIDIA configuration.”

Patrick Kennedy, ServeTheHome (20th March 2025)

So, while configurations beyond two units aren’t initially supported by NVIDIA, nothing technically stops you from linking more GB10 systems together. That opens up a whole world to explore in turning an initial test bed into an in-lab cluster that could run production-scale workloads.
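For a two-unit cluster, one common way to run distributed PyTorch training is `torchrun`, started once on each node with a shared rendezvous address. The sketch below generates those per-node commands. It assumes one GB10 GPU per unit (hence one process per node). The IP addresses on the ConnectX-7 link and the `train.py` script are hypothetical placeholders. This is a general PyTorch pattern, not an NVIDIA-documented DGX Spark procedure.

```python
from __future__ import annotations

import shlex


def torchrun_commands(node_addrs: list[str], script: str,
                      port: int = 29500, procs_per_node: int = 1) -> list[str]:
    """One torchrun command per node; the first address acts as the rendezvous master."""
    if not node_addrs:
        raise ValueError("need at least one node address")
    master = node_addrs[0]
    commands = []
    for rank in range(len(node_addrs)):
        args = [
            "torchrun",
            f"--nnodes={len(node_addrs)}",
            f"--nproc_per_node={procs_per_node}",  # assumes one GB10 GPU per unit
            f"--node_rank={rank}",
            f"--master_addr={master}",
            f"--master_port={port}",
            script,
        ]
        commands.append(shlex.join(args))
    return commands


if __name__ == "__main__":
    # Hypothetical addresses assigned to the ConnectX-7 ports of two units.
    for cmd in torchrun_commands(["192.168.100.10", "192.168.100.11"], "train.py"):
        print(cmd)
```

Run the first command on the first unit and the second on the other. Adding addresses to the list is all it takes to describe a larger cluster, though, as ServeTheHome notes, that goes beyond what NVIDIA initially supports. Depending on your network setup, you may also need to point NCCL at the right interface, for example via the `NCCL_SOCKET_IFNAME` environment variable.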

Whether you’re looking into a single mini supercomputer, a cluster of two, or a larger test bed, DGX Spark is a great way to trial the superchip architecture at an affordable price and in a convenient form factor.

As an NVIDIA Elite Compute Partner and Elite Networking Partner, our team can help you navigate NVIDIA’s product portfolio and find the perfect solution for your workload.

If you’re interested in hearing more, please drop me a line or give us a ring at +44 161 947 4321.

If you’d like to hear more about the other announcements at NVIDIA GTC 2025, I broke down what the biggest server and networking releases mean for your organisation.